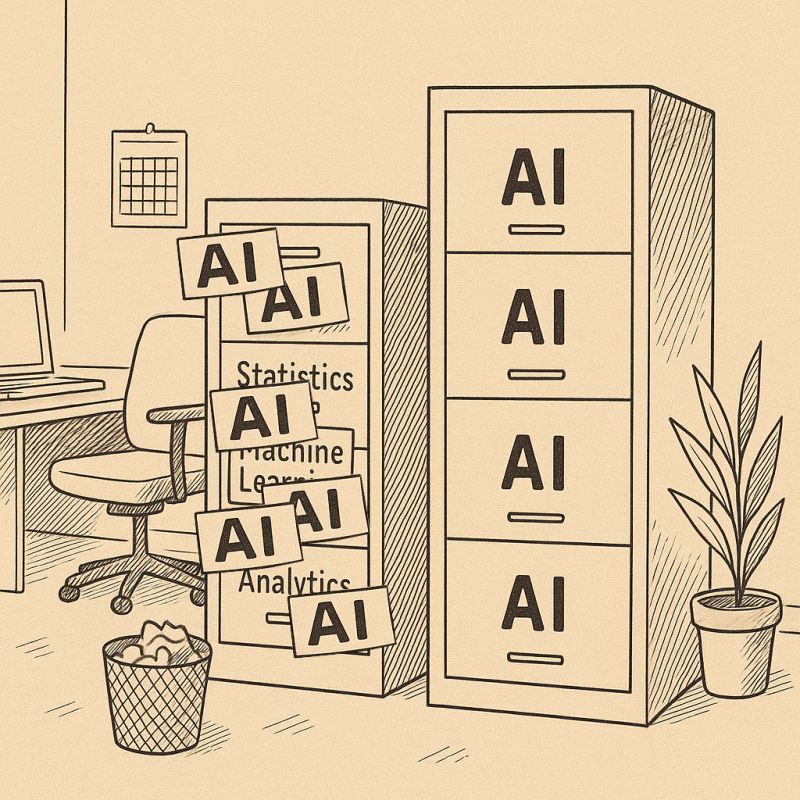

Yesterday we labeled everything AI; today we too often forget what Machine Learning and statistics do well.

We've managed a peculiar feat in AI: simultaneously overhyping and underappreciating the technology. For years, the industry branded every statistical tool as "Artificial Intelligence" despite its limited resemblance to genuine intelligence. This inflation of terminology served marketing well but sowed confusion.

Now we face the inverse problem. With the arrival of generative AI systems truly deserving the label, we encounter a new misconception: that these systems are simply more powerful versions of traditional methods, suitable for the same decision-making tasks.

Of course, today's foundation models are built with Machine Learning techniques, but there is a crucial difference: with "traditional" Machine Learning, we work on structured data toward a well-defined goal; with foundation models (i.e., "AI"), we use unstructured data to construct general-purpose models.

Putting these technologies in the same basket is a fundamental category error. Foundation models excel at generating human-like content and processing unstructured data. They can create compelling text, images, and dialogue, and they can transform unstructured information into structured formats. However, they fundamentally lack the consistent decision-making of purpose-built systems.

Traditional approaches, from regression to random forests to specialized neural networks, remain superior for structured decision tasks. They offer reliability, consistency, and interpretability that foundation models cannot match, along with fewer unexpected errors and clear, well-defined metrics for testing.

This distinction shapes effective deployment. Using language models where traditional Machine Learning would suffice is like using a paintbrush to hammer a nail. Conversely, dismissing generative AI's breakthroughs in language understanding because of its factual errors and hallucinations squanders its revolutionary potential.

Our tendency to simplify complex technological distinctions into labels leads us to either undersell innovation or overextend its applications. The reality demands nuance: these are complementary approaches with distinct strengths and limitations.

Precision in how we discuss these technologies is essential. Foundation models represent a breakthrough for language tasks but do not render traditional approaches obsolete, particularly where consistency is paramount. This isn't merely academic: it determines how effectively we harness these tools and how responsibly we deploy them.

In our rush to embrace the new, we must not forget what the old does well—or we risk building the future on foundations of sand.