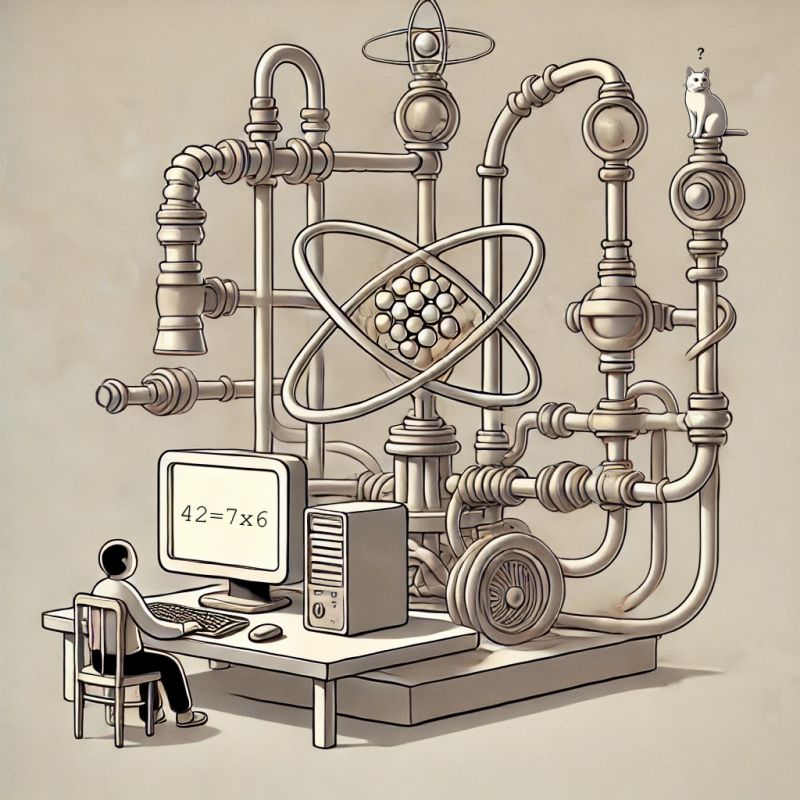

Quantum computing continues to generate a lot of buzz. But having delved deeper into the subject recently, I find myself increasingly skeptical.

At the heart of the excitement are incredible promises made by the proponents of quantum computing: Life-saving drugs in record time! Global logistics problems solved effortlessly! Improved AI performance! Optimized financial markets!

But here’s the problem: It’s hard to find solid evidence to back up the hype. In fact, when you examine quantum computing as a near-term practical technology, several critical issues emerge:

- Quantum computers remain incredibly fragile and error-prone. Even tiny disturbances cause so-called decoherence, which ruins calculations. Error correction is advancing, but it still requires massive overhead and is nowhere near where it needs to be. The largest quantum computers today have around 1,000 noisy physical qubits (note the crucial difference between physical and logical qubits!). As it stands, we likely need millions of physical qubits to do anything useful. Have we seen such exponential advances in classical chip manufacturing? Yes. But the mere fact that we’ve seen them for classical chips provides little evidence that quantum computing will follow the same path.

- Quantum speedups are not universal. Besides factoring large numbers and simulating quantum systems, only certain niche problems are currently known to benefit from the coveted quantum speedups (and most of those speedups are quadratic, not exponential!). The “quantum supremacy” papers that have been popping up recently, even those that stood up to scrutiny, involve rather contrived scenarios that often sound a bit too much like “a quantum computer is really good at simulating a quantum computer”.

- We're still very far from practical quantum advantage over optimized classical algorithms. In several key respects, quantum hardware is much slower than classical hardware: operating a qubit takes far longer than switching a transistor, and data bandwidth is much lower. A recent study has shown, for example, that even a fault-tolerant quantum computer with 10,000 logical (!) qubits would have to run for several centuries before it would actually outperform a not-so-new Nvidia A100 GPU on a useful problem.
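To see why a mostly quadratic speedup can be eaten up by slower hardware, here is a rough back-of-the-envelope sketch. It assumes a Grover-style unstructured search, where a classical machine needs about N steps and a quantum computer about (π/4)·√N steps, and it uses t_c and t_q as illustrative symbols for the time per classical step and per error-corrected quantum step. These symbols and the example ratio below are my own simplifying assumptions, not figures from the study mentioned in the last point.

```latex
% Simplified cost model (assumption): N items, t_c = time per classical step,
% t_q = time per error-corrected quantum step
T_{\text{classical}} \approx N \, t_c, \qquad
T_{\text{quantum}} \approx \tfrac{\pi}{4}\sqrt{N}\, t_q

% The quantum computer wins only when T_quantum < T_classical:
\tfrac{\pi}{4}\sqrt{N}\, t_q < N\, t_c
\;\Longleftrightarrow\;
\sqrt{N} > \tfrac{\pi}{4}\,\frac{t_q}{t_c}
\;\Longleftrightarrow\;
N > \left(\tfrac{\pi}{4}\right)^{2} \left(\frac{t_q}{t_c}\right)^{2}

% Example with a hypothetical per-step slowdown of t_q / t_c = 10^4:
N > 0.617 \times 10^{8} \approx 6 \times 10^{7}
```

The break-even problem size grows with the square of the per-step slowdown, so every factor of 10 lost in hardware speed pushes the crossover out by a factor of 100. This toy model also ignores that classical hardware such as a GPU runs many steps in parallel, which pushes the crossover even further out.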

Don't get me wrong: quantum computing is fascinating science. But the practical challenges are immense, and the purported benefits may be overstated. On top of that, the timeline keeps slipping: In the early 2000s, experts predicted useful quantum computers by 2020. Now even optimists are talking about the mid-2030s or beyond.

They say there’s a fine line between vision and imagination. With quantum computing, it feels like we’re balancing on a tightrope between the two. Investors who are currently pouring billions into quantum computing may find themselves waiting a long time for returns.

P.S. If I have to eat my words in five or ten years, then kudos to all the brilliant minds who made it happen (and congratulations on winning the $5M XPRIZE: https://www.xprize.org/competitions/qc-apps)!