One of the most expensive lies in data today? "Ready-to-serve data for every use case."

The promises are seductive: universal definitions that work for everyone, self-service analytics without context switching, numbers that align across the company without argument.

But meaning doesn't work that way. Data is always recorded in a specific context and only makes sense inside one. To finance, a "customer" is an account with booked revenue; to marketing, an opted-in prospect; to product, an active user; to legal, a "data subject." Each definition is correct for its purpose. None transfers without losing critical nuance.

Standardize too broadly and you get definitions so diluted they help no one. Standardize too narrowly and you get precision for one group that is fundamentally wrong for others. Time makes it harder still: markets shift, products evolve, regulations change, and customer behavior moves, so yesterday's definition quietly drifts out of date.

Any "universal" system must either anticipate every variant and become unwieldy, or ignore variation and quietly accumulate errors that surface at critical moments. The results are costly: impressive dashboards that hold up in reviews but crumble when someone needs to make a decision that actually matters. Meanwhile, teams build workarounds because the official path is too slow or too inflexible.

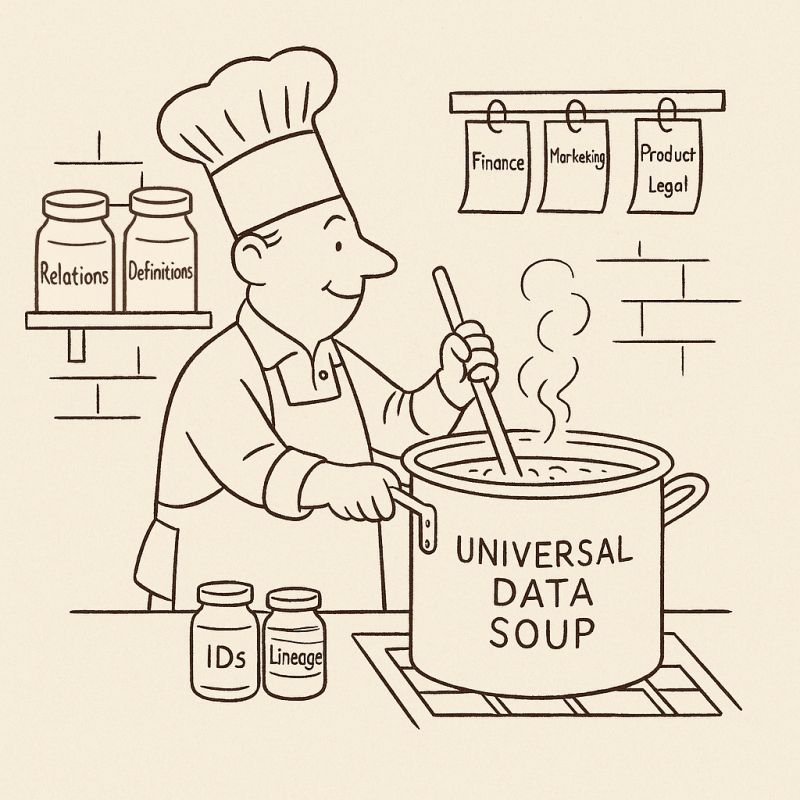

Universal data is like promising individually crafted meals for millions, delivered instantly. It's a utopia. A more honest approach is to treat data as a well-organized pantry, stocked with clearly labeled ingredients: unambiguous IDs, consistent time measures, documented relationships, transparent lineage.

Keep components small, versioned, and documented. Then let the people closest to the questions do the final preparation. They carry both the context of what they're asking and the consequences of getting it wrong. They'll notice when definitions drift and have incentives to fix problems quickly.

Yes, this requires coordination and discipline, but it's achievable. Central teams maintain standards and tools. Domain teams own their recipes and share what works, building institutional knowledge rather than hoarding it. Common questions can still get pre-built answers, but those answers should be treated as dated solutions with explicit assumptions and context, not universal truths.

The tests are simple. First, does your approach shorten the distance from a live business question to an informed action that survives scrutiny? Second, when someone asks how you knew something, can you trace the answer back through the specific assumptions that shaped it, not in a demo but in day-to-day business?

It's messier than the slick tool demo where everything always fits together nicely. But it feeds the business when it's hungry.